Hey everyone! Rad here.

Let's be real for a second. We've all been there. It's 4 PM on a Friday, you're just trying to open a port for a new service, and instead of writing code, you're frantically clicking through the GCP Console or AWS dashboard. We call this "ClickOps." It's fun until you misclick, accidentally delete a production firewall rule, and suddenly your phone lights up like a Christmas tree.

I've been there. I once brought down a payment gateway because I thought I was editing the Staging load balancer. Spoiler: I wasn't.

But hey, Terraform 1.0 dropped just last month (June 2021, finally!), and it's a signal to all of us: Infrastructure as Code (IaC) is grown up. It's stable. So, if you are still copy-pasting main.tf files between folders like a maniac, we need to talk.

Today, I'm going to walk you through how to structure your Terraform for an actual enterprise—not a "Hello World" tutorial—so you can manage Dev, Staging, and Prod without losing your mind (or your job).

The "Copy-Paste" Trap

Here is the classic anti-pattern I see in startups that grow too fast. You build your infrastructure for dev. It works great! Then the boss says, "We need prod by Monday."

So, what do you do? You copy the dev folder, rename it prod, change a few IP addresses, and hit terraform apply.

Six months later, dev has drifted so far from prod that they look like distant cousins who only meet at funerals. You make a change in dev, it works, you try to apply it to prod, and boom—dependency errors, resource conflicts, and tears.

This is the Monolithic Folder trap. It doesn't scale.

The Solution: Modularize Like You Mean It

To fix this, we need to treat our infrastructure like software. We need Modules.

Think of modules as Lego blocks. You design a generic "VPC Block" or a "GKE Cluster Block." These blocks don't know if they are in Prod or Dev; they just know how to build a VPC or a Cluster based on the inputs you give them.
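To make the Lego idea concrete, here is a rough sketch of what a networking block could contain. The inputs (project_id, region, subnet_cidr) and the network_id output mirror the module calls used later in this post. The extra name variable, the resource labels, and the way the files are split are assumptions for illustration, not a finished module.

```hcl
# modules/networking/variables.tf
variable "project_id" {
  type        = string
  description = "GCP project that owns the network"
}

variable "region" {
  type        = string
  description = "Region for the subnet"
}

variable "subnet_cidr" {
  type        = string
  description = "CIDR range for the subnet, e.g. 10.0.0.0/16"
}

variable "name" {
  type        = string
  description = "Name prefix for the network (hypothetical input, added for this sketch)"
  default     = "main"
}

# modules/networking/main.tf
resource "google_compute_network" "this" {
  project                 = var.project_id
  name                    = "${var.name}-vpc"
  auto_create_subnetworks = false # we define the subnet ourselves
}

resource "google_compute_subnetwork" "this" {
  project       = var.project_id
  region        = var.region
  name          = "${var.name}-subnet"
  network       = google_compute_network.this.id
  ip_cidr_range = var.subnet_cidr
}

# modules/networking/outputs.tf
output "network_id" {
  value       = google_compute_network.this.id
  description = "ID of the VPC, consumed by other modules"
}
```

Notice that nothing in there mentions dev or prod. Everything environment-specific arrives through the variables.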

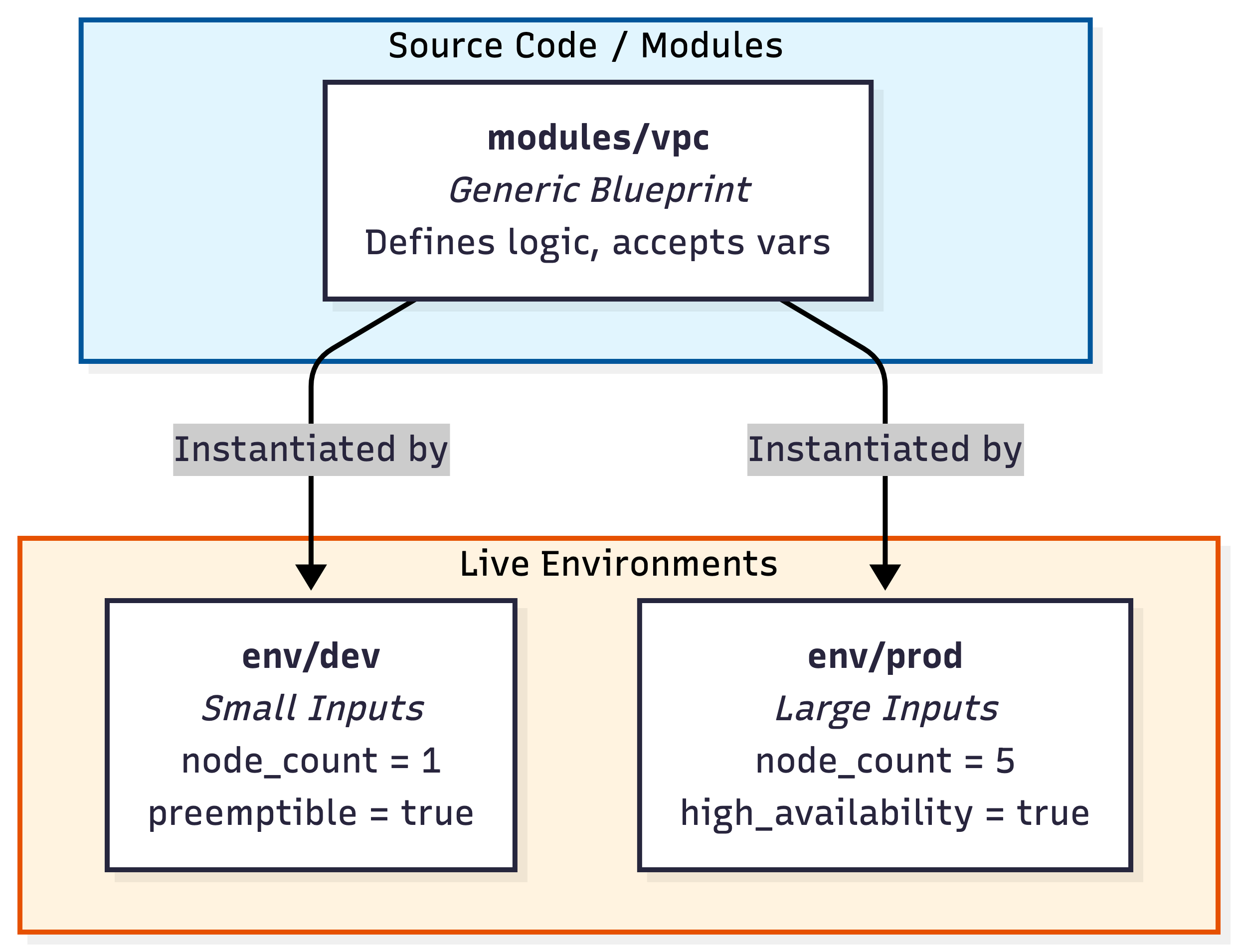

The Architecture: Blueprints vs. Houses

Here is a diagram that explains this separation:

Why this works:

- Consistency: Both environments use the exact same code for the VPC.

- Safety: You test the module updates in Dev first. If the module code is broken, you catch it there before it ever touches Prod.

The Directory Structure That Won't Make You Cry

This is the exact structure I'm recommending to my clients right now. It separates the definition of resources from the instantiation of resources.

    ├── modules/                      # The Blueprints (Reusable code)
    │   ├── networking/
    │   │   ├── main.tf
    │   │   ├── variables.tf
    │   │   └── outputs.tf
    │   ├── gke-cluster/
    │   └── cloud-sql/
    │
    ├── environments/                 # The Live Infra (Where state lives)
    │   ├── dev/
    │   │   ├── main.tf               # Calls modules with dev vars
    │   │   ├── backend.tf            # Remote state config (GCS bucket)
    │   │   └── terraform.tfvars      # e.g., node_count = 1
    │   │
    │   └── prod/
    │       ├── main.tf               # Calls SAME modules with prod vars
    │       ├── backend.tf
    │       └── terraform.tfvars      # e.g., node_count = 5, high_mem

In environments/prod/main.tf, your code looks clean and simple:

    module "vpc" {
      source = "../../modules/networking"

      project_id = var.project_id
      region     = "us-central1"

      # Prod specific subnet sizing
      subnet_cidr = "10.0.0.0/16"
    }

    module "gke" {
      source = "../../modules/gke-cluster"

      vpc_id = module.vpc.network_id

      # Prod gets the expensive stuff
      machine_type = "e2-standard-4"
      min_nodes    = 3
    }

See that? No resource definitions in the environment folders. Just module calls.
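For comparison, here is a sketch of how the dev side might call the same two modules. The subnet range, the machine type, the project ID, and the way node_count is wired into min_nodes are all assumptions for illustration. Only the module paths and input names come from the prod example.

```hcl
# environments/dev/main.tf
variable "project_id" {
  type = string
}

variable "node_count" {
  type    = number
  default = 1
}

module "vpc" {
  source = "../../modules/networking"

  project_id  = var.project_id
  region      = "us-central1"
  subnet_cidr = "10.10.0.0/20" # assumed dev range, smaller than prod
}

module "gke" {
  source = "../../modules/gke-cluster"

  vpc_id       = module.vpc.network_id
  machine_type = "e2-medium" # assumed cheaper machine type for dev
  min_nodes    = var.node_count
}

# environments/dev/terraform.tfvars would then hold something like:
#   project_id = "my-dev-project"   (hypothetical project ID)
#   node_count = 1
```

Same blueprints, different inputs. The only things that differ between the two folders are values, not resource definitions.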

The "Gotcha": The State File Monster

Here is where I see people get bit. Do NOT keep your terraform.tfstate file on your laptop. I repeat: not on your laptop. Put that thing in a remote backend immediately.

If you keep it local:

- Your teammate Rad (that's me) will try to run apply at the same time as you.

- We will corrupt the state.

- We will have a bad time.

Since we are on GCP, use a GCS bucket for your backend. It supports state locking by default, with no extra configuration. If I'm running an apply and you try to run one, Terraform will refuse with "Error: Error acquiring the state lock," and the lock info will tell you that Rad is holding it. This has saved my bacon more times than I can count.
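A backend.tf for this can be very small. Here is a minimal sketch, assuming a bucket that you have already created. The bucket name and prefix are made up for illustration.

```hcl
# environments/prod/backend.tf
terraform {
  backend "gcs" {
    bucket = "acme-terraform-state" # hypothetical bucket, must already exist
    prefix = "environments/prod"    # each environment gets its own prefix
  }
}
```

Run terraform init after adding or changing this block so Terraform can set up the backend and, if needed, offer to migrate your existing local state.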

Another Gotcha: Watch out for circular dependencies between modules. If Module A needs an output from Module B, but Module B needs an output from Module A to start, Terraform can't work out what to build first and bails out with a "Cycle" error. You usually solve this by breaking the modules down further, or by using data sources to look up resources that already exist instead of wiring module outputs back into each other.
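Here is a rough sketch of the data source approach, assuming the network was created earlier (by another configuration or an earlier apply). The network name, firewall rule, and CIDR range are hypothetical.

```hcl
variable "project_id" {
  type = string
}

# Look up a network that already exists rather than consuming it
# as an output from another module in the same configuration.
data "google_compute_network" "shared" {
  project = var.project_id
  name    = "main-vpc" # hypothetical network name
}

resource "google_compute_firewall" "allow_internal" {
  project = var.project_id
  name    = "allow-internal-https"
  network = data.google_compute_network.shared.self_link

  allow {
    protocol = "tcp"
    ports    = ["443"]
  }

  source_ranges = ["10.0.0.0/16"]
}
```

The trade-off is that the lookup only works once the network exists, so this suits things that are created once and rarely change.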

Versioning: The 1.0 Promise

Now that we have Terraform 1.0, we have stability promises. But that doesn't mean you should go wild.

Best Practice: Pin your versions! In your modules, explicitly state which version of the provider you expect.

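    terraform {
      required_providers {
        google = {
          source = "hashicorp/google"
          # Allows 3.70 and later 3.x releases, never 4.0
          version = "~> 3.70"
        }
      }

      # A floor, not a ceiling: any Terraform CLI from 1.0.0 up
      required_version = ">= 1.0.0"
    }

If you don't do this, one day the Google provider will ship a new major version, change a resource definition, and your entire pipeline will break because terraform init automatically pulled the latest version. Commit the .terraform.lock.hcl file too, so everyone on the team gets the exact same provider build. Consistency is key, folks.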
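How tight the pin is depends on the operator you choose. Here is a short sketch of the common options for the same provider. Which one fits is a judgment call for your team, not a rule.

```hcl
terraform {
  required_providers {
    google = {
      source = "hashicorp/google"

      # Pick ONE constraint style:
      version = "~> 3.70" # >= 3.70.0 and < 4.0.0
      # version = "~> 3.70.0" # >= 3.70.0 and < 3.71.0 (patch releases only)
      # version = "3.70.0"    # exactly this release
    }
  }
}
```

Looser constraints pick up bug fixes with less effort. Tighter ones mean nothing changes until you decide it should.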

Wrap Up

Moving from a "click-and-pray" console strategy to a structured, modular Terraform setup is the difference between being an "Admin" and being a "Cloud Architect."

It takes a bit more work upfront to write the modules, sure. But the next time management asks you to spin up a new region for disaster recovery? You just create a new folder, point it to your existing modules, and look like a wizard.

Now, if you'll excuse me, I need to go refactor a client's 4,000-line main.tf file. Wish me luck.

Stay modular, Rad