Hey everyone, Rad here! Welcome back to Part 3 of our journey dismantling the Monolith.

In Part 1, we set up the Load Balancer to strangle the traffic. In Part 2, we containerized the messy Java code using Jib.

Now, we have a bunch of containers ready to deploy. But where do they live?

If you just spin up a new GCP Project for every team and let them create their own networks, you are going to end up in VPC Peering Hell. You know the drill: Team A needs to talk to Team B, so they peer. Team B needs Team C. Team A needs the on-prem VPN. Suddenly, you have a mesh network so complex that a single IP overlap brings down the whole company.

Or, you dump everyone into one giant project (the "Default" VPC approach), and suddenly the intern on the Frontend team accidentally deletes the firewall rule protecting the Database.

Neither of these works for an enterprise migration. We need structure. We need isolation. We need Shared VPC.

What is Shared VPC?

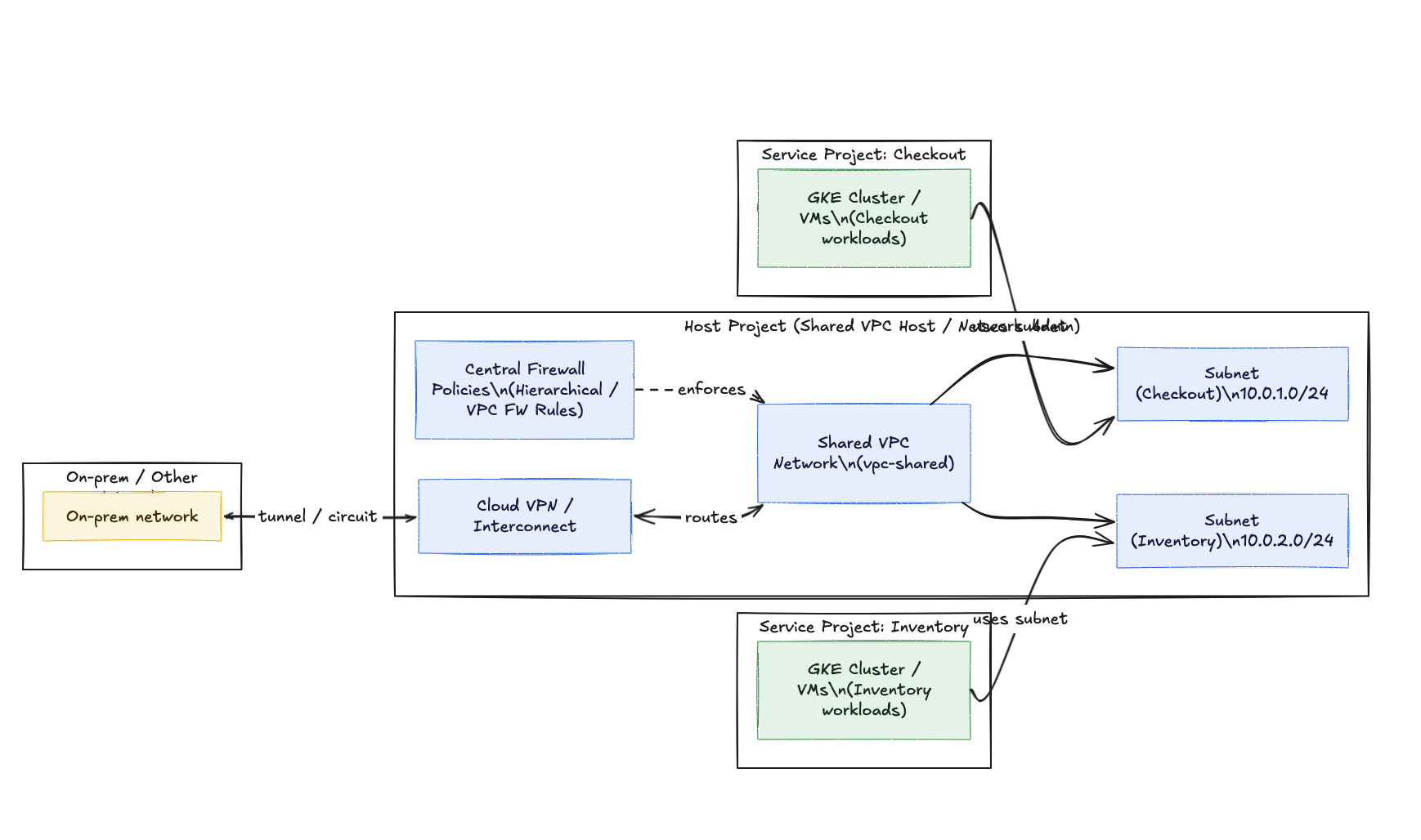

Think of Shared VPC like an apartment building.

- The Host Project (The Landlord): This project owns the building, the plumbing, and the electrical wiring (The VPC network, Subnets, VPNs, Firewalls, and Cloud NAT).

- The Service Projects (The Tenants): These are your individual teams (Checkout, Inventory, User Profile). They rent the apartments. They can bring their own furniture (VMs, GKE Clusters, Cloud Functions), but they plug into the wall sockets provided by the Landlord.

This separates Network Operations (NetOps) from Application Operations (DevOps).
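To make the landlord/tenant split concrete, here is a minimal Terraform sketch of how a project becomes a Host Project and how a Service Project attaches to it. The `host-project-prod` ID matches the one used later in this post; `checkout-project-prod` is a made-up name for illustration, so swap in your own.

```hcl
# Mark the NetOps-owned project as the Shared VPC host (the "Landlord").
resource "google_compute_shared_vpc_host_project" "host" {
  project = "host-project-prod"
}

# Attach a team project as a service project (a "Tenant").
# "checkout-project-prod" is a hypothetical project ID.
resource "google_compute_shared_vpc_service_project" "checkout" {
  host_project    = google_compute_shared_vpc_host_project.host.project
  service_project = "checkout-project-prod"
}
```

Referencing the host resource from the service attachment means Terraform enables the host first and then attaches the tenant.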

The Architecture: Hub and Spoke (Sort of)

Here is the design we implemented for our migration. We wanted the "Checkout" team to move fast, but we didn't want them messing with the BGP routes back to our on-prem datacenter.

Why we did this:

- Centralized Connectivity: We only pay for one Cloud VPN or Interconnect in the Host Project. All service projects use it automatically.

- Security Boundaries: The Checkout team has Admin rights in their Service Project, but they have barely any rights in the Host Project. They can't accidentally open port 22 to the world because they don't own the firewall rules.

- Billing Separation: Even though the network is shared, the bill for the Compute (GKE Nodes, VMs) goes to the Service Project. The CFO is happy because we can still track how much money the Checkout team is burning.

The Implementation: Tying it to GKE

Since we are deploying our Jib-built containers to Kubernetes, Shared VPC gets a little spicy. GKE clusters in a Shared VPC must be VPC-native, which means Pods and Services get their addresses from Alias IP ranges on the subnet.

Here is the setup process we followed:

1. The Host Project Setup

The NetOps team creates the VPC and the subnets. Crucial Step: For GKE, a simple subnet isn't enough. You need Secondary CIDR Ranges.

- Primary Range (Nodes): 10.0.1.0/24

- Secondary Range (Pods): 10.100.0.0/20

- Secondary Range (Services): 10.200.0.0/20
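Here is roughly what that looks like in Terraform on the Host Project side. The CIDRs, network name, subnet name, and region are the ones used in this post; the range names `checkout-pods` and `checkout-services` are placeholders I picked for the sketch.

```hcl
# The shared network lives in the Host Project; custom-mode so NetOps controls every subnet.
resource "google_compute_network" "shared_vpc" {
  project                 = "host-project-prod"
  name                    = "shared-vpc"
  auto_create_subnetworks = false
}

resource "google_compute_subnetwork" "checkout" {
  project       = "host-project-prod"
  name          = "checkout-subnet"
  region        = "us-central1"
  network       = google_compute_network.shared_vpc.id
  ip_cidr_range = "10.0.1.0/24" # primary range: GKE nodes

  # Secondary range for Pods (range name is an assumed placeholder).
  secondary_ip_range {
    range_name    = "checkout-pods"
    ip_cidr_range = "10.100.0.0/20"
  }

  # Secondary range for Services (range name is an assumed placeholder).
  secondary_ip_range {
    range_name    = "checkout-services"
    ip_cidr_range = "10.200.0.0/20"
  }
}
```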

2. IAM Permissions (The "Keys to the Apartment")

We had to grant the service agents of the Service Projects specific roles on the Host Project. Specifically:

- compute.networkUser on the specific subnets, for both the Google APIs Service Agent and the GKE Service Agent.

- container.hostServiceAgentUser on the Host Project, for the GKE Service Agent, so GKE can manage the network resources its clusters need there.
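A hedged sketch of those grants in Terraform. It assumes the usual service agent email formats (the GKE agent at `container-engine-robot` and the Google APIs agent at `cloudservices`), and `service_project_number` is a variable I introduced for the example, not something from our actual repo.

```hcl
# Numeric project number of the service project (assumed input).
variable "service_project_number" {
  type = string
}

locals {
  # Assumed standard formats for the service project's service agents.
  gke_agent  = "serviceAccount:service-${var.service_project_number}@container-engine-robot.iam.gserviceaccount.com"
  apis_agent = "serviceAccount:${var.service_project_number}@cloudservices.gserviceaccount.com"
}

# Network User on the one subnet the team is allowed to use, not on the whole project.
resource "google_compute_subnetwork_iam_member" "network_user" {
  for_each = {
    gke  = local.gke_agent
    apis = local.apis_agent
  }

  project    = "host-project-prod"
  region     = "us-central1"
  subnetwork = "checkout-subnet"
  role       = "roles/compute.networkUser"
  member     = each.value
}

# Host Service Agent User on the Host Project for the GKE service agent.
resource "google_project_iam_member" "host_service_agent_user" {
  project = "host-project-prod"
  role    = "roles/container.hostServiceAgentUser"
  member  = local.gke_agent
}
```

Scoping `compute.networkUser` to the subnet rather than the project is what keeps one tenant from plugging into another tenant's wall sockets.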

3. Deploying the Cluster

When the Checkout team runs their Terraform to create the GKE cluster, they reference the network in the Host Project.

network = "projects/host-project-prod/global/networks/shared-vpc"

subnetwork = "projects/host-project-prod/regions/us-central1/subnetworks/checkout-subnet"

Bang. The cluster spins up in the Checkout project, but its IPs come from the Host project.
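For context, here is a minimal sketch of a full cluster resource around those two lines. The project ID, cluster name, and secondary range names are hypothetical; the range names have to match whatever NetOps called the secondary ranges on the subnet.

```hcl
resource "google_container_cluster" "checkout" {
  project  = "checkout-project-prod" # hypothetical service project ID
  name     = "checkout-cluster"      # hypothetical cluster name
  location = "us-central1"

  # Full resource paths, because the network lives in a different project.
  network    = "projects/host-project-prod/global/networks/shared-vpc"
  subnetwork = "projects/host-project-prod/regions/us-central1/subnetworks/checkout-subnet"

  # VPC-native: Pods and Services draw from the subnet's secondary ranges.
  ip_allocation_policy {
    cluster_secondary_range_name  = "checkout-pods"     # assumed range name
    services_secondary_range_name = "checkout-services" # assumed range name
  }

  initial_node_count = 1
}
```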

Why not just use VPC Peering?

I get this question a lot. "Rad, Peering is easier. I just click 'Peer' and I'm done."

Here is why Peering fails at scale: Non-Transitivity. If Network A peers with Network B, and B peers with C... A cannot talk to C. To make A talk to C, you have to peer them directly. Now imagine you have 50 microservices, each in its own VPC. That is a full-mesh topology nightmare: n(n-1)/2 connections, which works out to 1,225 peerings for 50 networks!

Shared VPC avoids this. Everyone is on the same network, just segmented by subnets and firewall rules.
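If you want to plug your own numbers into the full-mesh formula, here is the arithmetic as Terraform locals. It is just an illustration of n(n-1)/2; the names are made up.

```hcl
locals {
  # Number of VPC networks that all need to reach each other.
  network_count = 50

  # Full mesh: every pair of networks needs its own peering.
  full_mesh_peerings = local.network_count * (local.network_count - 1) / 2
}

output "full_mesh_peerings" {
  value = local.full_mesh_peerings # 50 * 49 / 2 = 1225
}
```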

The "Gotchas" (The Pain Points)

Designing this looked great on the whiteboard. Implementing it? Here are the bruises we got:

1. The "Firewall Rule" Friction

The Issue: A developer on the Checkout team deploys a new service on port 8080. It doesn't work. Why? Because the firewall in the Host Project blocks it.

The Frustration: The developer cannot add a firewall rule. They have to open a ticket with the NetOps team.

The Fix: We automated this later using tags. We agreed that any VM/Node with the network tag allow-8080 gets the rule, so devs just tag their resources. (More on this in a future Governance post).
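A sketch of what such a tag-based rule might look like in the Host Project. The rule name and the `10.0.0.0/8` source range are assumptions for the example; pick whatever source ranges match your own internal address plan.

```hcl
resource "google_compute_firewall" "allow_8080" {
  project   = "host-project-prod"
  name      = "allow-8080" # hypothetical rule name, mirrors the tag
  network   = "shared-vpc"
  direction = "INGRESS"

  allow {
    protocol = "tcp"
    ports    = ["8080"]
  }

  # Assumption: only internal traffic should reach these services.
  source_ranges = ["10.0.0.0/8"]

  # The rule applies only to VMs/nodes carrying this network tag.
  target_tags = ["allow-8080"]
}
```

NetOps still owns the rule, but developers opt in by tagging their own resources, so nobody needs a ticket for a port that has already been approved.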

2. Running out of Pod IPs

The Issue: We underestimated how many Pods the Inventory team would run. We gave them a /22 secondary range (1024 IPs). They scaled up, ran out of IPs, and GKE refused to schedule new pods.

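The Fix: You can't easily expand a secondary range that is already in use. We had to create an additional secondary range on the subnet and attach it to the cluster through a new node pool, so the new nodes draw their Pod IPs from the new range.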
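Roughly, the extra node pool looks like the sketch below. Every name here is hypothetical, and it assumes the new secondary range has already been added to the subnet in the Host Project.

```hcl
resource "google_container_node_pool" "inventory_extra" {
  project    = "inventory-project-prod" # hypothetical service project ID
  cluster    = "inventory-cluster"      # hypothetical cluster name
  location   = "us-central1"
  name       = "inventory-pool-2"
  node_count = 1

  network_config {
    # Use an existing secondary range instead of asking GKE to create one.
    create_pod_range = false
    pod_range        = "inventory-pods-2" # hypothetical range name on the host subnet
  }
}
```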

Lesson: Always overestimate your Pod IP ranges. IP space is (mostly) free in the RFC1918 private world. Don't be stingy.
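Here is the sizing math that bit us, as Terraform locals you can adapt. It assumes GKE's default of 110 max Pods per node, where each node reserves a /24 from the Pod range no matter how many Pods it actually runs.

```hcl
locals {
  pod_range_prefix = 22 # the /22 we gave the Inventory team
  per_node_prefix  = 24 # assumption: default /24 per node (110 max Pods per node)

  # Total addresses in the Pod range.
  pod_ips = pow(2, 32 - local.pod_range_prefix) # 1024

  # How many nodes can get a per-node block before the range is exhausted.
  max_nodes = pow(2, local.per_node_prefix - local.pod_range_prefix) # 4
}
```

So 1024 IPs sounds like plenty, but under those defaults it only covers four nodes. The /20 in the setup section above covers sixteen.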

3. Deletion Order

The Issue: We tried to tear down a test environment. Terraform failed.

The Fix: You cannot delete the Host Project Subnets while the Service Project GKE cluster is still using them. You have to kill the tenant's resources before you can demolish the building.
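One way to get the order right, assuming the subnet and the cluster are managed in the same Terraform configuration (often they are not, in which case you destroy the service project's stack first). If the cluster references the subnet resource instead of a hard-coded string, Terraform knows about the dependency and destroys the cluster before the subnet. Project, cluster, and range names below are hypothetical.

```hcl
resource "google_compute_subnetwork" "checkout" {
  project       = "host-project-prod"
  name          = "checkout-subnet"
  region        = "us-central1"
  network       = "projects/host-project-prod/global/networks/shared-vpc"
  ip_cidr_range = "10.0.1.0/24"

  secondary_ip_range {
    range_name    = "checkout-pods" # assumed range name
    ip_cidr_range = "10.100.0.0/20"
  }

  secondary_ip_range {
    range_name    = "checkout-services" # assumed range name
    ip_cidr_range = "10.200.0.0/20"
  }
}

resource "google_container_cluster" "checkout" {
  project  = "checkout-project-prod" # hypothetical service project ID
  name     = "checkout-cluster"      # hypothetical cluster name
  location = "us-central1"
  network  = "projects/host-project-prod/global/networks/shared-vpc"

  # Referencing the resource (not a string) creates an implicit dependency,
  # so on destroy the cluster is removed before the subnet.
  subnetwork = google_compute_subnetwork.checkout.self_link

  ip_allocation_policy {
    cluster_secondary_range_name  = "checkout-pods"
    services_secondary_range_name = "checkout-services"
  }

  initial_node_count = 1
}
```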

Wrap Up

Shared VPC is the backbone of our "Monolith to Microservices" migration. It gave us the isolation we needed for security without creating a networking spaghetti monster.

Now, we have our network, and we have our GKE clusters running. But... looking at the billing usage, and the operational overhead of managing these node pools, I'm starting to wonder if we made the right choice with GKE Standard.

Google has been pushing this new thing called GKE Autopilot. They say it manages the nodes for you and you only pay for the Pods. Is it marketing fluff, or is it actually cheaper for our workload?

Next week, I'm doing a deep dive comparison. It's GKE Standard vs. Autopilot: A Real-World Cost & Ops Analysis. I'll bring the spreadsheets.

See you then!

-Rad