Hey everyone! Rad here. Today we are tackling the elephant in the Kubernetes room: GKE Autopilot.

It's been about six months since Google dropped Autopilot into General Availability (GA), and the dust has finally settled. When it first launched, the marketing promise was tantalizing: "Kubernetes without the node management." As someone who has lost sleep because a node pool upgrade got stuck in a Repairing loop, that sounded like music to my ears.

But then came the sticker shock. "Wait, you're charging how much per vCPU?"

I've spent the last few months running parallel workloads—some on our trusty GKE Standard clusters and others on the shiny new Autopilot. I've looked at the bills, I've fought with the constraints, and I'm here to give you the no-fluff, real-world rundown. Is the "Autopilot Tax" worth the ops freedom?

Let's break it down.

The Core Difference: Who Drives the Bus?

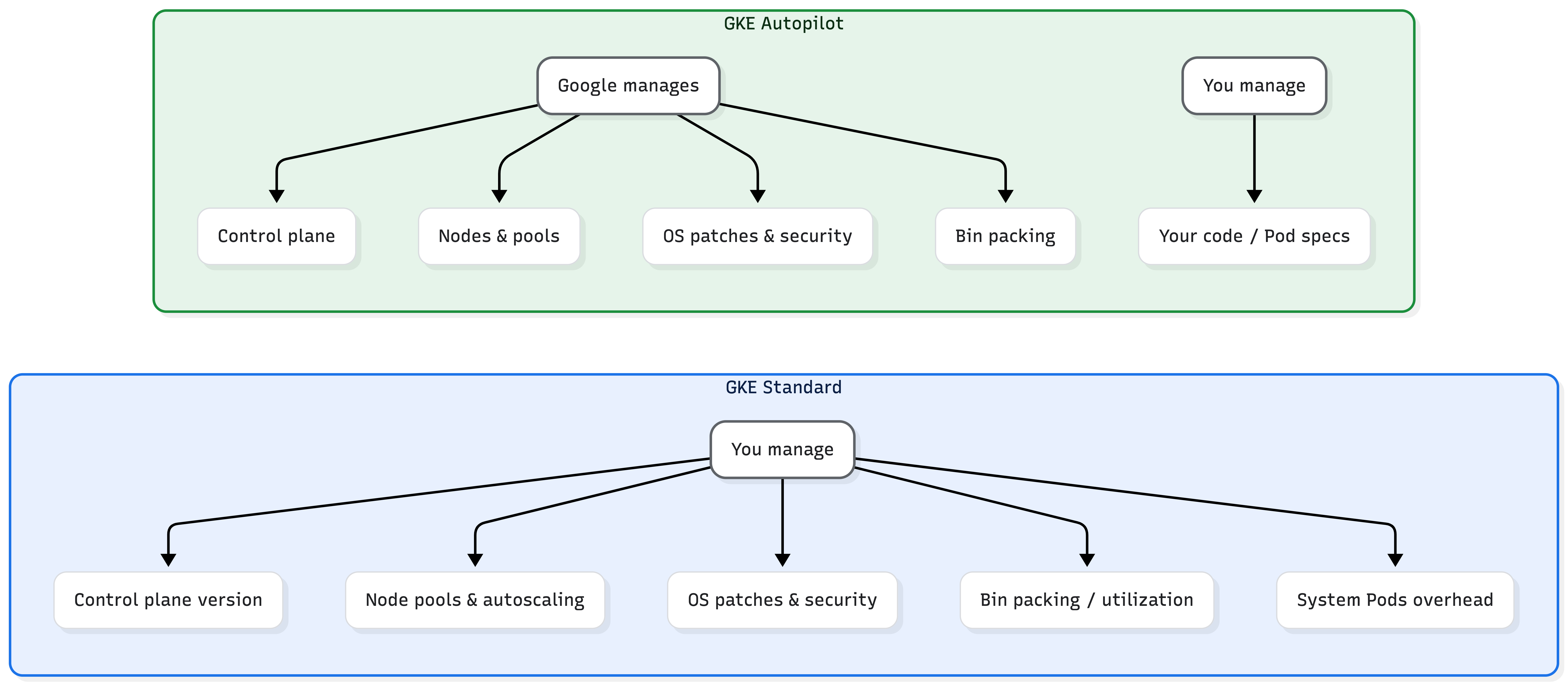

If you're new to this showdown, here is the simplest way to visualize it.

- GKE Standard is like renting a moving truck. You pay for the truck (the Nodes) regardless of whether you fill it to the brim or just put one lamp in the back. You also have to drive it, park it, and change the oil (OS patches/upgrades).

- GKE Autopilot is like hiring movers. You pay exactly for the volume of stuff you're moving (Pod resources). You don't care what truck they use or if they need to stop for gas. You just tell them where to go.

Here is a quick view of the responsibility shift:

The Cost Analysis: The "Unused Space" Trap

This is where everyone gets tripped up. On paper, Autopilot per-vCPU pricing looks roughly 2x higher than a raw Compute Engine machine type. Ouch, right?

Wrong. Well, not always wrong, but nuanced.

In GKE Standard, you are paying for Provisioned Capacity. If you spin up a node with 8 vCPUs and your apps only use 4 vCPUs, you are still paying for all 8. That 50% waste is on you. Plus, you pay for the resources consumed by the system agents (logging, monitoring, kube-proxy), which eat up a chunk of every node.

In Autopilot, you pay for Requested Capacity. If your pod requests 500m CPU, you pay for 500m. Google eats the cost of the OS overhead and the empty space on the node.
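If you want to sanity-check that against your own numbers, here is a rough back-of-the-napkin sketch. The prices are placeholders I made up to mirror the "roughly 2x" ratio (they are not real GCP rates), the function names are hypothetical, and the model deliberately ignores system agent overhead, so treat it as an illustration rather than a billing calculator.

```python
def standard_cost_per_used_vcpu(node_price_per_vcpu: float, utilization: float) -> float:
    """Effective price of each vCPU your pods actually use on Standard.

    You pay for the whole node, so idle capacity inflates the price
    of the part you really use.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return node_price_per_vcpu / utilization


def break_even_utilization(node_price_per_vcpu: float, autopilot_price_per_vcpu: float) -> float:
    """Utilization at which Standard and Autopilot cost the same per used vCPU."""
    return node_price_per_vcpu / autopilot_price_per_vcpu


# Placeholder unit prices (assumption): Autopilot at 2x the raw node rate.
NODE_PRICE = 1.0
AUTOPILOT_PRICE = 2.0

if __name__ == "__main__":
    print(f"break-even utilization: {break_even_utilization(NODE_PRICE, AUTOPILOT_PRICE):.0%}")
    for utilization in (0.35, 0.70, 0.90):
        effective = standard_cost_per_used_vcpu(NODE_PRICE, utilization)
        cheaper = "Autopilot" if effective > AUTOPILOT_PRICE else "Standard"
        print(f"{utilization:.0%} utilized: Standard costs {effective:.2f} per used vCPU -> {cheaper} wins")
```

Plug in your real node price, your real Autopilot rate, and the utilization from your monitoring dashboards. Since this sketch skips the system agent overhead, the real break-even on Standard probably sits a bit higher than what it prints.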

When Autopilot Wins on Cost:

- Development Environments: Dev clusters are notoriously empty at night. In Standard, even with cluster autoscaling, you have minimum node counts. In Autopilot, if you scale to zero pods, your compute bill drops to $0 (you still pay the flat cluster management fee).

- Spiky Workloads: If you have batch jobs that burst for an hour, Autopilot is brilliant. You don't have to worry about finding the perfect node shape to fit the job.

- Slackers (Low Bin-Packing): Be honest. Are your Standard clusters running at 90% utilization? Most I see in the wild are hovering around 30-40%. With Autopilot priced at roughly 2x per vCPU, the break-even sits near 50% utilization, so at 30-40% Autopilot is actually cheaper.

When Standard Wins on Cost:

- High-Density Monoliths: If you have a massive Java app that hogs an entire machine and runs 24/7, Standard is cheaper. You can pack that node to 95% and pay the lower raw compute rate.

- Spot Instances (Currently): While Autopilot is getting there, managing Spot VMs in Standard gives you way more control over price and fallbacks right now.

The Ops Analysis: Sleep vs. Control

This is where Autopilot truly shines—but also where it bites.

The Good: Security is baked in. In Standard, hardening a cluster is a project. You need to set up Shielded Nodes, Workload Identity, network policies, etc. Autopilot enforces security best practices by default. You literally cannot deploy a privileged container. It forces your devs to write better, safer manifests.

Also, Node Management is gone. No more "cordon and drain." Google handles the churning of nodes beneath you. It is seamless.

The Bad:

It's opinionated. Very opinionated.

Do you use a third-party monitoring agent that needs to mount a host path? Denied.

Do you need to tweak sysctl parameters for high-throughput networking? Denied (mostly).

Do you rely on Mutating Webhooks to inject sidecars? It's complicated.

I recently tried to migrate a legacy app that relied on the NET_ADMIN capability to mess with iptables. Autopilot looked at that deployment YAML and laughed in my face. We had to rewrite the networking logic before we could move it.
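Before you attempt a migration, it can help to scan your manifests for the red flags above. Below is a minimal sketch of such a check. It only covers the three things I ran into in this post (privileged containers, hostPath volumes, and NET_ADMIN), it is not Autopilot's actual admission policy, and the function name and sample pod are hypothetical.

```python
from typing import Any, Dict, List


def autopilot_red_flags(pod_spec: Dict[str, Any]) -> List[str]:
    """Return human-readable warnings for a pod spec given as a plain dict.

    Checks only the constraints mentioned in this post; a clean result
    does not guarantee Autopilot will accept the workload.
    """
    findings: List[str] = []

    # Host path mounts (e.g. third-party monitoring agents).
    for volume in pod_spec.get("volumes") or []:
        if "hostPath" in volume:
            findings.append(f"volume {volume.get('name', '?')!r} mounts a hostPath")

    containers = (pod_spec.get("containers") or []) + (pod_spec.get("initContainers") or [])
    for container in containers:
        name = container.get("name", "?")
        security = container.get("securityContext") or {}
        if security.get("privileged"):
            findings.append(f"container {name!r} is privileged")
        added = (security.get("capabilities") or {}).get("add") or []
        if "NET_ADMIN" in added:
            findings.append(f"container {name!r} adds NET_ADMIN")

    return findings


# Hypothetical pod spec resembling the legacy app described above.
legacy_pod = {
    "containers": [
        {
            "name": "legacy-net",
            "securityContext": {"capabilities": {"add": ["NET_ADMIN"]}},
        }
    ],
    "volumes": [{"name": "host-logs", "hostPath": {"path": "/var/log"}}],
}

if __name__ == "__main__":
    for finding in autopilot_red_flags(legacy_pod):
        print("WARNING:", finding)
```

Feed it the `spec.template.spec` section of each Deployment (loaded with whatever YAML parser you already use) and you get a quick list of workloads that will need rework first.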

Gotchas (Watch out for these!)

- The "250m" CPU Floor: Autopilot has a minimum pod size. Even if your tiny microservice only needs 10m CPU, Autopilot will charge you for 250m. If you run a thousand tiny pods, this adds up fast.

- The DaemonSet Dilemma: In Standard, DaemonSets are "free" (they run on the space you already paid for). In Autopilot, you pay for every replica of that DaemonSet. If you run Datadog or Splunk agents, do the math first.

- Storage Costs: Autopilot defaults to balanced disks. If you need high IOPS SSDs, make sure you specify the storage class, or you might hit performance bottlenecks (or unexpected bills).
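To see how quickly the CPU floor from the first gotcha adds up, here is a tiny sketch of the "thousand tiny pods" scenario. It models only the 250m floor as described above and nothing else about how Autopilot sizes or bills pods, so the names and the simplification are mine.

```python
AUTOPILOT_CPU_FLOOR_M = 250  # minimum billable CPU per pod in millicores, per this post


def billed_millicores(requested_m: int, floor_m: int = AUTOPILOT_CPU_FLOOR_M) -> int:
    """CPU you get billed for: your request, or the floor if the request is smaller."""
    return max(requested_m, floor_m)


# A thousand tiny microservices that each only need 10m CPU.
pod_requests_m = [10] * 1000

requested_total_m = sum(pod_requests_m)
billed_total_m = sum(billed_millicores(r) for r in pod_requests_m)

if __name__ == "__main__":
    print(f"requested: {requested_total_m / 1000:.0f} vCPU")  # requested: 10 vCPU
    print(f"billed:    {billed_total_m / 1000:.0f} vCPU")     # billed:    250 vCPU
```

That is a 25x gap between what the pods need and what shows up on the bill, which is why consolidating very small services (or keeping them on Standard) deserves a look before you migrate.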

The Verdict

So, which one should you pick?

Go with GKE Autopilot if:

- You want an "SRE-in-a-box" experience.

- You are building new, cloud-native apps that don't require root access.

- Your cluster utilization is generally low or highly variable (Dev, Staging, Batch).

- You hate upgrading Kubernetes versions.

Stick with GKE Standard if:

- You are a "Power User" who needs to tune the kernel or run privileged pods.

- You have predictable, high-throughput workloads where you can achieve >70% node utilization.

- You rely heavily on custom 3rd party agents that need deep host access.

Personally? I've moved all our staging environments and our client-facing web tier to Autopilot. The peace of mind is worth the slight premium. But for our backend data processing crunchers? I'm keeping those on Standard... at least until I can figure out how to optimize those bills!

Catch you in the next one (where I might finally tackle Shared VPCs without crying).

- Rad