Hello again! Rad here. If you caught Part 1, you saw how we used the Strangler Fig pattern with Google Cloud Load Balancing to route traffic away from our monolithic beast.

But that raises the question: route traffic to what, exactly?

We aren't routing to another VM. We aren't savages. We are routing to containers.

Now, if you are a Java developer (or managing a team of them), you know that "containerizing Java" usually sounds like the start of a bad joke. You've got your massive mvn clean install, your JAR/WAR files, and then... the dreaded Dockerfile.

For this project, we had a decade of legacy Java code. We tried the standard Dockerfile approach first. It was painful. It was slow. It made my laptop sound like a jet engine.

So, we threw the Dockerfiles in the trash. We switched to Jib and Cloud Build. Here is why you should too, and how it saved our migration timeline.

The Problem: "The Layering Cake from Hell"

Here is the reality of a legacy Java Monolith-turned-Microservice. You have:

- Application Code: Maybe 5MB. Changes constantly.

- Dependencies (the `lib` folder): Spring Boot, Hibernate, Apache Commons, and half the internet. Easily 200MB+. Changes rarely.

When you write a standard Dockerfile like this:

```dockerfile
FROM openjdk:11-jre-slim
COPY target/my-app-1.0.jar /app.jar
ENTRYPOINT ["java", "-jar", "/app.jar"]
```

You are doing it wrong.

Every time you change one line of code in your app, the JAR you COPY changes, so Docker's checksum for that COPY step no longer matches. It invalidates the cache. It rebuilds the entire layer. And because that one layer holds your code and all of its dependencies, you are re-uploading 200MB of dependencies to your registry every. Single. Build.
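To see why one edited line costs the whole JAR, here's a toy model of Docker's build cache. It's a simplified sketch, not Docker's real code. Each step's cache key folds in its parent's key, the instruction, and a checksum of any copied files. Any step whose key changed gets rebuilt. The checksums are placeholder strings. The 205MB figure is just the ~200MB of dependencies plus ~5MB of code from the list above.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

public class DockerCacheSketch {

    /** One Dockerfile instruction, the checksum of any files it copies, and the size of the layer it produces. */
    static final class Step {
        final String instruction;
        final String inputChecksum;
        final long layerBytes;

        Step(String instruction, String inputChecksum, long layerBytes) {
            this.instruction = instruction;
            this.inputChecksum = inputChecksum;
            this.layerBytes = layerBytes;
        }
    }

    static String sha256(String text) {
        try {
            byte[] hash = MessageDigest.getInstance("SHA-256").digest(text.getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder();
            for (byte b : hash) hex.append(String.format("%02x", b));
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("every JVM ships SHA-256", e);
        }
    }

    /** Each key folds in the parent's key, so one changed step changes every key after it. */
    static List<String> cacheKeys(List<Step> steps) {
        List<String> keys = new ArrayList<>();
        String parent = "FROM openjdk:11-jre-slim";
        for (Step step : steps) {
            String key = sha256(parent + "\n" + step.instruction + "\n" + step.inputChecksum);
            keys.add(key);
            parent = key;
        }
        return keys;
    }

    /** Sums the size of every layer whose cache key is missing from the previous build. */
    static long bytesToRebuild(List<Step> steps, Set<String> previousKeys) {
        List<String> keys = cacheKeys(steps);
        long total = 0;
        for (int i = 0; i < steps.size(); i++) {
            if (!previousKeys.contains(keys.get(i))) {
                total += steps.get(i).layerBytes;
            }
        }
        return total;
    }

    /** The Dockerfile above: one COPY of the fat JAR, then ENTRYPOINT (metadata only, no file bytes). */
    static List<Step> fatJarBuild(String jarChecksum) {
        long jarBytes = 205_000_000L; // illustrative: ~200MB of dependencies + ~5MB of app code
        return List.of(
            new Step("COPY target/my-app-1.0.jar /app.jar", jarChecksum, jarBytes),
            new Step("ENTRYPOINT [\"java\", \"-jar\", \"/app.jar\"]", "", 0));
    }

    public static void main(String[] args) {
        Set<String> cached = new HashSet<>(cacheKeys(fatJarBuild("jar-v1")));
        // One edited class produces a new JAR checksum, so the whole JAR layer is rebuilt.
        System.out.println(bytesToRebuild(fatJarBuild("jar-v2"), cached)); // 205000000
        System.out.println(bytesToRebuild(fatJarBuild("jar-v1"), cached)); // 0
    }
}
```

The design point is the chaining. Because the dependencies and your code share one COPY, there is no smaller unit for the cache to keep.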

We were waiting 10 minutes for builds to push. Developers sat idle, found out the build had failed, restarted it, and repeated the cycle. Productivity was tanking.

The Solution: Jib (Java Image Builder)

Enter Jib. It's an open-source tool from Google that integrates directly into Maven or Gradle.

Here is the pitch that sold me: Jib separates your application into layers based on volatility.

- Layer 1: Dependencies (The heavy stuff).

- Layer 2: Resources.

- Layer 3: Classes (Your actual code).

When you rebuild, Jib says, "Oh, you only changed UserController.java? Cool. I'll just push this tiny 5KB layer. The 200MB of Spring JARs are already in the registry."
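Here's the same idea from Jib's side. This is a toy sketch, not Jib's actual implementation. Files are grouped into layers by a hypothetical `layerFor` rule that loosely mirrors the three layers above. Each layer gets a content digest. Only layers whose digest the registry doesn't already hold get pushed. The paths and file contents are made up for illustration.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

public class LayeredPushSketch {

    /** Hypothetical rule: dependency JARs, compiled classes, and everything else as resources. */
    static String layerFor(String path) {
        if (path.endsWith(".jar")) return "dependencies";
        if (path.endsWith(".class")) return "classes";
        return "resources";
    }

    static MessageDigest newSha256() {
        try {
            return MessageDigest.getInstance("SHA-256");
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("every JVM ships SHA-256", e);
        }
    }

    static String toHex(byte[] hash) {
        StringBuilder hex = new StringBuilder("sha256:");
        for (byte b : hash) hex.append(String.format("%02x", b));
        return hex.toString();
    }

    /** Hashes each layer's files (sorted by path, so order is stable) and returns layer name -> digest. */
    static Map<String, String> layerDigests(Map<String, String> files) {
        Map<String, MessageDigest> hashers = new TreeMap<>();
        for (Map.Entry<String, String> file : new TreeMap<>(files).entrySet()) {
            MessageDigest md = hashers.computeIfAbsent(layerFor(file.getKey()), name -> newSha256());
            md.update(file.getKey().getBytes(StandardCharsets.UTF_8));
            md.update((byte) 0); // separator so path and content can't run together
            md.update(file.getValue().getBytes(StandardCharsets.UTF_8));
            md.update((byte) 0);
        }
        Map<String, String> digests = new TreeMap<>();
        hashers.forEach((name, md) -> digests.put(name, toHex(md.digest())));
        return digests;
    }

    /** The registry stores blobs by digest, so any layer it already holds is skipped. */
    static Set<String> layersToPush(Map<String, String> digests, Set<String> registry) {
        Set<String> push = new TreeSet<>();
        digests.forEach((name, digest) -> {
            if (!registry.contains(digest)) push.add(name);
        });
        return push;
    }

    public static void main(String[] args) {
        Map<String, String> build1 = new TreeMap<>(Map.of(
            "libs/spring-core.jar", "spring bytes",
            "libs/hibernate-core.jar", "hibernate bytes",
            "resources/application.properties", "server.port=8080",
            "classes/com/example/UserController.class", "controller v1"));
        Set<String> registry = new HashSet<>();
        System.out.println(layersToPush(layerDigests(build1), registry)); // [classes, dependencies, resources]
        registry.addAll(layerDigests(build1).values());

        Map<String, String> build2 = new TreeMap<>(build1);
        build2.put("classes/com/example/UserController.class", "controller v2"); // only the controller changed
        System.out.println(layersToPush(layerDigests(build2), registry)); // [classes]
    }
}
```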

The "No-Docker" Magic

The best part? You don't need the Docker daemon. Jib builds the image bits and pushes them directly to the registry (Artifact Registry or GCR). This is huge for CI/CD pipelines because running "Docker inside Docker" for builds is a security nightmare and a performance bottleneck.
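Part of why no daemon is needed: in a registry, an image is just a set of content-addressed blobs plus a small JSON manifest that lists them by digest and size. Any process that can hash bytes and speak the registry's HTTP API can publish one.

Here's a minimal sketch of building such a manifest in the OCI image format. The config and layer bytes are placeholders, not real gzipped tarballs or a complete image config. Jib writes Docker's V2.2 manifest format by default, which has the same shape, and `<container><format>OCI</format></container>` switches it to OCI.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.List;

public class ManifestSketch {

    /** Content digest in the "algorithm:hex" form registries use. */
    static String digest(byte[] blob) {
        try {
            byte[] hash = MessageDigest.getInstance("SHA-256").digest(blob);
            StringBuilder hex = new StringBuilder("sha256:");
            for (byte b : hash) hex.append(String.format("%02x", b));
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("every JVM ships SHA-256", e);
        }
    }

    /** An OCI descriptor: media type, content digest, and size in bytes. */
    static String descriptor(String mediaType, byte[] blob) {
        return String.format("{\"mediaType\": \"%s\", \"digest\": \"%s\", \"size\": %d}",
                mediaType, digest(blob), blob.length);
    }

    /** Builds the manifest JSON that points at the config blob and each layer blob. */
    static String manifest(byte[] config, List<byte[]> layers) {
        StringBuilder sb = new StringBuilder();
        sb.append("{\"schemaVersion\": 2, ");
        sb.append("\"mediaType\": \"application/vnd.oci.image.manifest.v1+json\", ");
        sb.append("\"config\": ").append(descriptor("application/vnd.oci.image.config.v1+json", config)).append(", ");
        sb.append("\"layers\": [");
        for (int i = 0; i < layers.size(); i++) {
            if (i > 0) sb.append(", ");
            sb.append(descriptor("application/vnd.oci.image.layer.v1.tar+gzip", layers.get(i)));
        }
        sb.append("]}");
        return sb.toString();
    }

    public static void main(String[] args) {
        // Placeholder bytes standing in for a real config JSON and real gzipped tar layers.
        byte[] config = "{\"architecture\":\"amd64\",\"os\":\"linux\"}".getBytes(StandardCharsets.UTF_8);
        List<byte[]> layers = List.of(
            "dependency jars".getBytes(StandardCharsets.UTF_8),
            "resources".getBytes(StandardCharsets.UTF_8),
            "classes".getBytes(StandardCharsets.UTF_8));
        System.out.println(manifest(config, layers));
    }
}
```

A builder uploads each blob it has to push, then PUTs this manifest under a tag. None of those steps needs a local Docker daemon.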

The Pipeline: Cloud Build + Jib

We paired Jib with Google Cloud Build. Why? Because I don't want to manage a Jenkins server. I want to pay for the build seconds I use and not worry about patching the build OS.

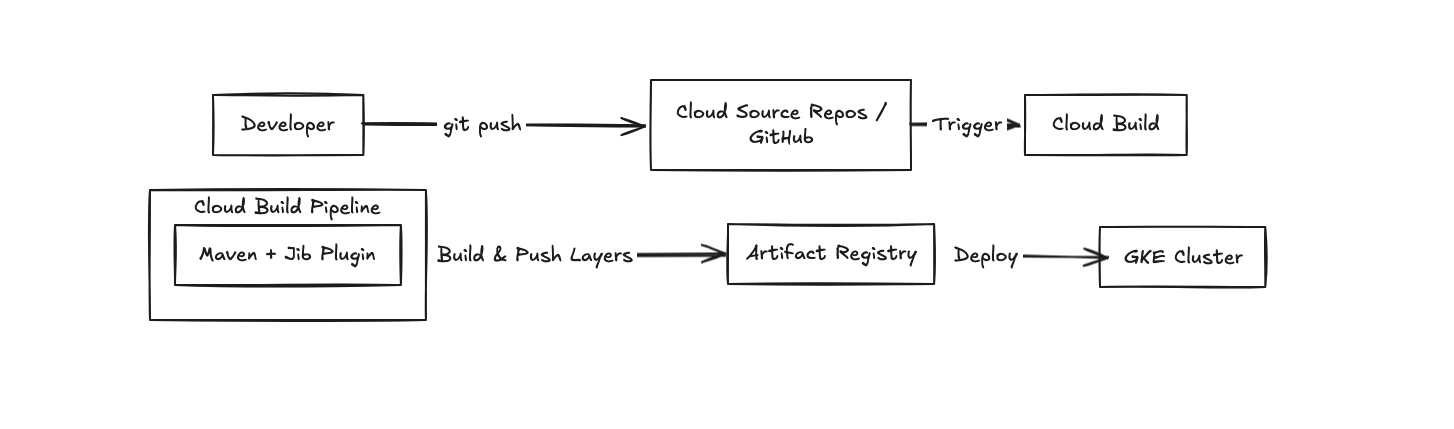

Here is what our new flow looks like:

The Configuration

In the `<build><plugins>` section of our pom.xml, we just added this:

```xml
<plugin>
  <groupId>com.google.cloud.tools</groupId>
  <artifactId>jib-maven-plugin</artifactId>
  <version>3.1.4</version>
  <configuration>
    <from>
      <!-- Optimization: use Distroless for security! Java 11 to match the old Dockerfile.
           Jib 3.x does not default to Distroless, so it has to be set here. -->
      <image>gcr.io/distroless/java11-debian11</image>
    </from>
    <to>
      <image>gcr.io/my-project/my-service</image>
    </to>
    <container>
      <mainClass>com.example.MyApplication</mainClass>
    </container>
  </configuration>
</plugin>
```

And our cloudbuild.yaml became ridiculously simple. No docker build. No docker push. Just Maven.

```yaml
steps:
  - name: 'gcr.io/cloud-builders/mvn'
    args: ['compile', 'jib:build']
```

That's it. That's the tweet.

Why We Switched (The Benefits)

- Speed: Builds went from 10 minutes to roughly 45 seconds on incremental changes.

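- Security (Distroless): We build on a "Distroless" Java base image. Older Jib releases used Distroless by default, but Jib 3.x defaults to a regular JRE base image (AdoptOpenJDK, later Eclipse Temurin), so you have to set it explicitly with the plugin's `<from>` setting. Distroless images don't ship a shell (no `/bin/bash`, not even `/bin/sh`), package managers, or other bloat. If a hacker gets into your container, they can't even run `ls`. It reduces the attack surface massively.

- Reproducibility: Jib ensures that if the inputs are the same, the image digest is the same. No more "it works on my machine" because of some weird OS timestamp drift.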
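The timestamp point is easy to demonstrate with plain JARs, because a JAR is a ZIP that stores each entry's modification time. Here's a minimal sketch that uses nothing beyond `java.util.zip`. If you pin every entry's time and sort entries by path, two builds of the same files hash identically. If you let the clock leak in, the hash changes.

Jib applies the same idea to image layers: by default it sets file modification times to the epoch plus one second. This sketch uses 2000-01-01 instead, because ZIP's DOS-style timestamps start in 1980.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Map;
import java.util.TreeMap;
import java.util.zip.ZipEntry;
import java.util.zip.ZipOutputStream;

public class ReproducibleJarSketch {

    // 2000-01-01T00:00:00Z; any fixed instant inside ZIP's 1980+ DOS date range works.
    static final long PINNED_TIME_MILLIS = 946_684_800_000L;

    /** Writes the files into an in-memory ZIP (the JAR format) with every entry stamped mtimeMillis. */
    static byte[] buildArchive(Map<String, String> files, long mtimeMillis) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ZipOutputStream zip = new ZipOutputStream(bytes)) {
            // Sort by path so entry order doesn't depend on how the filesystem lists files.
            for (Map.Entry<String, String> file : new TreeMap<>(files).entrySet()) {
                ZipEntry entry = new ZipEntry(file.getKey());
                entry.setTime(mtimeMillis); // without this, ZipOutputStream stamps the current time
                zip.putNextEntry(entry);
                zip.write(file.getValue().getBytes(StandardCharsets.UTF_8));
                zip.closeEntry();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    static String sha256(byte[] data) {
        try {
            byte[] hash = MessageDigest.getInstance("SHA-256").digest(data);
            StringBuilder hex = new StringBuilder();
            for (byte b : hash) hex.append(String.format("%02x", b));
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("every JVM ships SHA-256", e);
        }
    }

    public static void main(String[] args) {
        Map<String, String> files = Map.of(
            "com/example/UserController.class", "compiled bytes",
            "application.properties", "server.port=8080");
        String first = sha256(buildArchive(files, PINNED_TIME_MILLIS));
        String second = sha256(buildArchive(files, PINNED_TIME_MILLIS));
        // Same files, but "built" a day later with the clock leaking into the entries.
        String dayLater = sha256(buildArchive(files, PINNED_TIME_MILLIS + 86_400_000L));
        System.out.println(first.equals(second));   // true
        System.out.println(first.equals(dayLater)); // false
    }
}
```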

The "Gotchas" (Watch Out for These)

It wasn't all sunshine and rainbows. Here is what tripped us up:

1. The "Exploded" WAR issue

Our legacy app relied on the WAR being unpacked (exploded) to read some weird file system paths relative to the servlet context.

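- The Fix: Jib creates an image where the app is exploded by default (unlike the "Fat JAR" method). However, our legacy code assumed `catalina.base` was set, which is a Tomcat property, and Jib's default base image for WAR projects runs Jetty. We had to hardcode some paths in the legacy code to stop relying on it.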
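If you're facing the same cleanup, one way to take `catalina.base` out of the picture is to resolve the app root in a single place. Here's a minimal sketch. `APP_BASE_DIR` and `WEB-INF/legacy/paths.properties` are hypothetical names. The hardcoded fallback is Jib's default `appRoot` for WAR projects on its Jetty base image, `/var/lib/jetty/webapps/ROOT`.

```java
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Map;

public class AppPaths {

    // Hypothetical override variable; neither Jib nor Jetty defines it.
    static final String BASE_DIR_ENV = "APP_BASE_DIR";

    // Jib's default appRoot for WAR projects: the exploded WAR lives here on the Jetty base image.
    static final String JIB_WAR_APP_ROOT = "/var/lib/jetty/webapps/ROOT";

    /** Returns the exploded-WAR root without consulting catalina.base. */
    static Path appRoot(Map<String, String> env) {
        String override = env.get(BASE_DIR_ENV);
        if (override != null && !override.isBlank()) {
            return Paths.get(override);
        }
        return Paths.get(JIB_WAR_APP_ROOT);
    }

    /** Resolves a path that legacy code used to build from System.getProperty("catalina.base"). */
    static Path resolve(Map<String, String> env, String relative) {
        return appRoot(env).resolve(relative);
    }

    public static void main(String[] args) {
        // In production, pass System.getenv(); explicit maps keep the example deterministic.
        System.out.println(resolve(Map.of(), "WEB-INF/legacy/paths.properties"));
        System.out.println(resolve(Map.of(BASE_DIR_ENV, "/tmp/app"), "WEB-INF/legacy/paths.properties"));
    }
}
```

Keeping the lookup behind one method means the hardcoded path lives in exactly one place. Pointing the app at a different servlet engine later is then a one-line change.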

2. Private Repositories

Cloud Build couldn't reach our private Nexus repository where some internal shared JARs lived.

- The Fix: We had to encrypt our `settings.xml` with Google Cloud KMS (Key Management Service) and decrypt it inside the Cloud Build step so Maven could authenticate.

3. Local Dev vs. Cloud

Developers still wanted to run things locally.

- The Fix: Jib has a `jib:dockerBuild` goal that does use the local Docker daemon (`mvn compile jib:dockerBuild`) if you want to run the image on your laptop. Best of both worlds.

Wrap Up

By switching to Jib and Cloud Build, we removed the friction of containerization. The team stopped fighting Dockerfile syntax and started focusing on breaking apart the Monolith.

We now have fast, secure, and small containers landing in Artifact Registry.

But wait...

Now we have these microservices running in containers. Great. But how do we make sure Team A's "Cart Service" doesn't accidentally talk to Team B's "Inventory Service" if it's not supposed to? And how do we manage networking when we have dozens of teams deploying to the cloud?

We can't just dump everyone into the default VPC network and hope for the best.

Next month in Part 3, we are diving into Networking. I'm going to show you how we designed a Shared VPC architecture to give service teams isolation while keeping centralized control over the network. It's going to be a deep dive into subnets, host projects, and avoiding overlapping-IP hell.

See you in September!

-Rad