Alright team, gather 'round. We need to talk about Airflow.

If you've been running Apache Airflow on GCP using Cloud Composer 1 (CC1), you probably have a love-hate relationship with it. You love the managed service convenience, but you hate the bill—and you definitely hate waiting 15 minutes for a node to spin up when your morning ETL crunch hits.

Well, Google just dropped Cloud Composer 2 into General Availability, and let me tell you: this isn't just a version bump. It's a complete architectural overhaul.

I've spent the last two weeks migrating a few of our heavy data pipelines to CC2, and the difference is night and day. It basically fixes the biggest gripe I had with CC1: rigidity.

Here is why this update matters and why you should care about the architecture shift under the hood.

The Old World: The "Fixed Box" Problem (CC1)

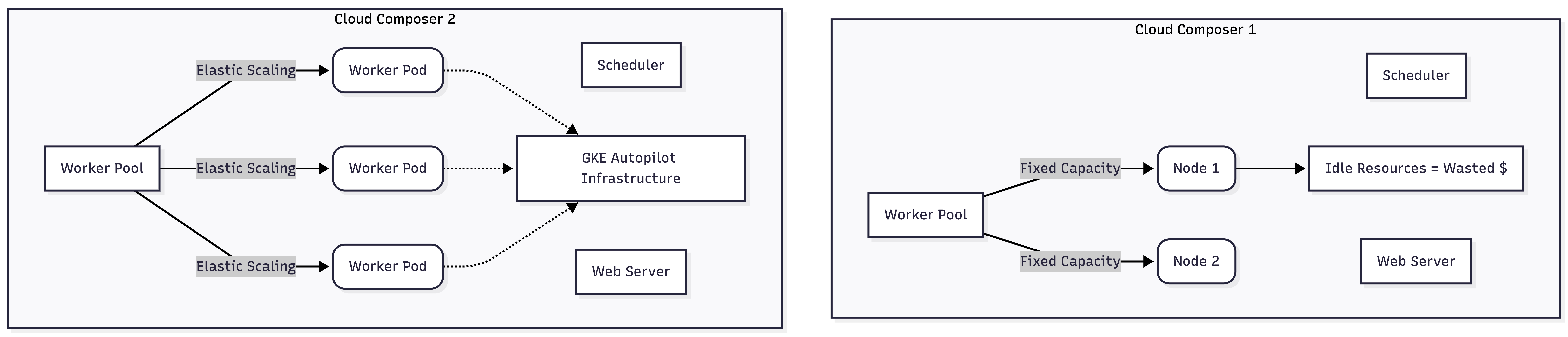

In Cloud Composer 1, when you created an environment, you were basically spinning up a GKE Standard cluster in your project. You could see the nodes in your Compute Engine console. You had to decide upfront: "Do I want 3 nodes or 30?"

If you picked 3, your morning tasks choked and died. If you picked 30, you were burning cash all night while the cluster sat idle doing absolutely nothing.

Scaling was possible, but it relied on the GKE Cluster Autoscaler, which is... let's say deliberate. It takes time to provision a VM, boot the OS, pull images, and join the cluster. By the time the extra capacity arrived, your DAG had arguably already failed or missed its SLA.

The New World: Elasticity via GKE Autopilot (CC2)

Remember my last post about GKE Autopilot? Well, Google clearly drank their own champagne here. Cloud Composer 2 runs on GKE Autopilot.

This changes the game. You no longer manage nodes. You don't even see the nodes. Instead, the Airflow Workers are just pods that scale horizontally almost instantly based on the number of tasks in your queue.

Here is the architectural shift in a nutshell:

Why "Split Architecture" Wins

In CC2, the environment is split into two parts:

- The Tenant Project (Google Managed): This hosts your Cloud SQL instance (the metadata DB), so the database lives outside your cluster and Google runs it for you.

- The Customer Project (Your Project): This hosts the GKE Autopilot cluster where your Schedulers, Workers, and the Web Server run. Each component runs as its own workload with its own CPU and memory allocation, which is huge because it means the Web Server is no longer fighting your workers for resources. No more UI timeouts just because a heavy Python task is hogging memory!

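Because of this split and the Autopilot backend, autoscaling is now task-driven, not CPU-driven: the number of workers follows the number of tasks waiting in the queue rather than node utilization.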

If you have 50 tasks queued up, CC2 screams, "GIVE ME PODS!" and Autopilot provisions them. When the tasks are done, the pods die, and—crucially—you stop paying.
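If "task-driven" sounds abstract, here is a minimal sketch of the idea. To be clear, this is my simplified mental model and not Composer's actual scaling formula; `target_workers` and its parameters are names I made up, with `worker_concurrency` standing in for how many tasks one worker runs at a time.

```python
import math


def target_workers(queued, running, worker_concurrency, min_workers, max_workers):
    """Simplified model: enough workers to give every task a slot, within limits."""
    if worker_concurrency <= 0:
        raise ValueError("worker_concurrency must be positive")
    if min_workers > max_workers:
        raise ValueError("min_workers cannot exceed max_workers")
    # One slot per queued or running task, rounded up to whole workers.
    needed = math.ceil((queued + running) / worker_concurrency)
    # Never drop below the floor or climb above the cap.
    return max(min_workers, min(max_workers, needed))


# Assumed settings for illustration: 6 task slots per worker, 1 to 10 workers.
print(target_workers(queued=50, running=0, worker_concurrency=6,
                     min_workers=1, max_workers=10))
print(target_workers(queued=0, running=0, worker_concurrency=6,
                     min_workers=1, max_workers=10))
```

The point of the model is that the input is the queue, not CPU load: 50 queued tasks ask for more workers right away, and an empty queue falls back to the minimum.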

The "Gotchas" (Because nothing is perfect)

Of course, I wouldn't be doing my job if I didn't warn you about the potholes I stepped in during migration.

- VPC Native is Mandatory: CC2 requires a VPC Native (Alias IP) network setup. If you are still running legacy networks or haven't configured your secondary IP ranges for pods/services, you're going to have a bad time. You can't deploy CC2 on a non-VPC-native network.

- PyPI Packages & Private IPs: Since the architecture is split, if you are running a Private IP environment (which you should be for security), your workers need a way to reach the internet to fetch PyPI packages, or you need to set up a private repository (like Artifact Registry). I spent three hours debugging a `pip install` failure because my Cloud NAT wasn't configured correctly for the new Autopilot subnet.

- Cost Structure Change:

  - CC1: High base cost (paying for nodes 24/7).

  - CC2: Lower base cost (small environment fee) + Variable compute cost.

  - Warning: If you have a badly written DAG that loops infinitely or spins up 1,000 tasks by mistake, CC2 will happily scale up to run them all, and your bill will explode. Set your maximum worker caps immediately!
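Here is a rough sketch of why the worker cap matters for the bill. Every dollar figure below is a placeholder I picked for the arithmetic, not real GCP pricing, and the function names are hypothetical; plug in your own rates.

```python
HOURS_PER_MONTH = 730  # average number of hours in a month


def cc2_monthly_cost(env_fee, worker_hour_rate, worker_hours):
    """Base environment fee plus variable compute, per the cost structure above."""
    return env_fee + worker_hour_rate * worker_hours


def worst_case_monthly_cost(env_fee, worker_hour_rate, max_workers):
    """Upper bound: every worker the cap allows, running every hour of the month."""
    return cc2_monthly_cost(env_fee, worker_hour_rate, max_workers * HOURS_PER_MONTH)


# Placeholder rates, NOT real prices.
ENV_FEE = 100.0          # assumed flat environment fee per month
WORKER_HOUR_RATE = 0.10  # assumed cost of one worker for one hour

typical = cc2_monthly_cost(ENV_FEE, WORKER_HOUR_RATE, worker_hours=2000)
capped = worst_case_monthly_cost(ENV_FEE, WORKER_HOUR_RATE, max_workers=10)
runaway = worst_case_monthly_cost(ENV_FEE, WORKER_HOUR_RATE, max_workers=1000)

print(f"typical month:        ${typical:,.2f}")
print(f"worst case, cap 10:   ${capped:,.2f}")
print(f"worst case, cap 1000: ${runaway:,.2f}")
```

The cap turns "my bill will explode" into a number you can compute in advance: the worst case grows linearly with the maximum worker count, so a runaway DAG can only cost you as much as the cap allows.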

Is it worth the migration?

Yes.

For us, the biggest win wasn't just cost (though we are saving about 20% on dev environments); it was reliability. Because the Web Server and DB no longer compete with the execution layer for resources, the Airflow UI is snappy even when the cluster is on fire processing data.

Plus, not having to patch GKE nodes manually? That's just the cherry on top.

If you're still on CC1, start planning your move. The grass really is greener on the Autopilot side.

See you next month—I'm diving into Event-Driven Architectures and trying to get Pub/Sub to talk to everything without making a mess.

- Rad