What. A. Year.

If you are reading this, congratulations—you survived 2021. You survived the supply chain shortages, the endless Zoom calls, the "Great Resignation," and, just when we thought we could coast into the holidays, the internet caught fire with Log4j (more on that nightmare later).

Every December, I like to look back at the architectural shifts we've made. Last year (2020) was all about "Panic Migration"—getting to the cloud because the physical office ceased to exist. But 2021? 2021 was the year we grew up. It was the year we stopped just "running servers" in the cloud and started actually engineering reliability.

It was the year my team finally transitioned from "Ops" to "SRE" (Site Reliability Engineering).

Now, I know "SRE" is a buzzword that recruiters love to slap on job descriptions for SysAdmins to justify a salary bump. But for us, on Google Cloud Platform, the shift was real, painful, and absolutely necessary.

Let's break down what this journey looked like, the tools that made it possible, and why "Hero Culture" is the enemy of stability.

The "Ops" Trap: Why We Had to Change

In early 2021, we were drowning. We had successfully migrated a massive chunk of our workload to GCP, but we were managing it like an on-prem data center.

Our workflow looked like this:

- Ticket Driven: Developers threw code over the wall. If it broke, they opened a Jira ticket for Ops.

- Alert Fatigue: Our PagerDuty went off for everything. CPU > 60%? Page. Disk > 80%? Page. One pod restarting? Page. We were waking up at 3 AM for issues that resolved themselves within 2 minutes.

- Heroics: We relied on "Heroes"—that one senior engineer (often me, unfortunately) who knew exactly which script to run when the database locked up.

This is not scalable. It's a recipe for burnout. We were doing Operational Drudgery, or what Google calls Toil.

The Definition of Toil: Manual, repetitive, automatable, tactical, devoid of enduring value, and scaling linearly as the service grows.

We decided to adopt the Google SRE philosophy: SRE is what happens when you treat operations as a software problem.

Pillar 1: Killing "ClickOps" with Infrastructure as Code

The first rule of SRE club: No Console Changes.

In the old days, if we needed a firewall rule, I'd log into the GCP Console, click VPC, click Firewall, and add it. Fast, right? Wrong. Because six months later, nobody knows why that rule exists, and if we try to replicate the environment in a new region, we forget it.

In 2021, we went 100% Terraform.

We restructured our entire GCP organization using the Google Cloud Foundation Toolkit. We stopped building "snowflake" servers. We started building modules.

- Need a GKE cluster? Call the terraform-google-modules/kubernetes-engine module.

- Need a project? There's a module for that.

The Win: When Log4j hit us a couple of weeks ago, we didn't have to manually check 500 servers. We scanned our Terraform state and our Artifact Registry. We knew exactly what was running where.
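To make "scanning the Terraform state" concrete, here is a minimal sketch that counts resources by type from the JSON that `terraform show -json` prints. It is an illustration under stated assumptions, not the tooling described above: the function names and the sample state are hypothetical, and only the `values.root_module` / `child_modules` / `resources` layout comes from Terraform's JSON output format.

```python
import json
from collections import Counter
from typing import Iterator


def iter_resources(module: dict) -> Iterator[dict]:
    """Yield every resource in a module and, recursively, in its child modules."""
    yield from module.get("resources", [])
    for child in module.get("child_modules", []):
        yield from iter_resources(child)


def inventory(state: dict) -> Counter:
    """Count resources by type in the JSON printed by `terraform show -json`."""
    root = state.get("values", {}).get("root_module", {})
    return Counter(res["type"] for res in iter_resources(root))


# Hypothetical, hand-written sample that follows the `terraform show -json` layout.
SAMPLE_STATE = json.loads("""
{
  "values": {
    "root_module": {
      "resources": [
        {"address": "google_compute_firewall.allow_lb",
         "type": "google_compute_firewall", "name": "allow_lb"}
      ],
      "child_modules": [
        {
          "address": "module.gke",
          "resources": [
            {"address": "module.gke.google_container_cluster.primary",
             "type": "google_container_cluster", "name": "primary"}
          ]
        }
      ]
    }
  }
}
""")

if __name__ == "__main__":
    for resource_type, count in sorted(inventory(SAMPLE_STATE).items()):
        print(f"{resource_type}: {count}")
```

Against a real workspace, the input would come from something like `terraform show -json > state.json`, loaded with `json.load`.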

Pillar 2: Moving from "Uptime" to "User Happiness" (SLIs and SLOs)

This was the hardest cultural shift.

Traditional Ops cares about: "Is the server up?" SRE cares about: "Is the user happy?"

We implemented Service Level Indicators (SLIs) and Service Level Objectives (SLOs) using Cloud Monitoring.

The realization: A server can be "up" (responding to ping) but serving 500 errors. Or it can be serving 200 OKs, but taking 10 seconds to do it. In both cases, the server is "up," but the user is furious.

We defined our Golden Signals:

- Latency: How long does it take?

- Traffic: How much demand is there?

- Errors: How many requests fail?

- Saturation: How "full" is the service?
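As a rough illustration of how two of these signals turn into SLIs, the sketch below computes an availability SLI and a latency SLI from a batch of request records. The `Request` type, the field names, and the 300 ms threshold are assumptions made for the example; in Cloud Monitoring the same ratios are defined on metrics rather than in application code.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Request:
    status: int        # HTTP status code returned to the user
    latency_ms: float  # time taken to serve the request


def availability_sli(requests: Sequence[Request]) -> float:
    """Fraction of requests that did not fail with a 5xx status."""
    if not requests:
        return 1.0  # no traffic means no user was let down
    good = sum(1 for r in requests if r.status < 500)
    return good / len(requests)


def latency_sli(requests: Sequence[Request], threshold_ms: float) -> float:
    """Fraction of all requests served within threshold_ms."""
    if not requests:
        return 1.0
    fast = sum(1 for r in requests if r.latency_ms <= threshold_ms)
    return fast / len(requests)


if __name__ == "__main__":
    # The server is "up" for all four requests, yet two users had a bad time.
    batch = [
        Request(200, 120.0),
        Request(200, 10_000.0),  # 200 OK, but ten seconds late
        Request(500, 80.0),      # fast, but an error
        Request(200, 90.0),
    ]
    print(f"availability: {availability_sli(batch):.2%}")
    print(f"latency (<= 300 ms): {latency_sli(batch, 300.0):.2%}")
```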

We set up SLO Widgets in the GCP Console. Instead of alerting on "CPU High," we alert on "Burn Rate."

- Alert me if we have burned through 2% of our Error Budget in the last hour.

This silenced 90% of our pagers. If CPU is at 95% but latency is fine and errors are zero... let it burn. The users are happy, so I'm going back to sleep.
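The arithmetic behind a burn-rate alert is short enough to sketch. Burn rate is the observed error rate divided by the error rate the SLO allows, and the share of budget consumed in a window follows from it. The function names, the 99.9% SLO, and the 30-day (720-hour) budget period are assumptions for this example.

```python
def burn_rate(error_rate: float, slo: float) -> float:
    """How many times faster than 'allowed' the error budget is being spent."""
    return error_rate / (1.0 - slo)


def budget_consumed(error_rate: float, slo: float,
                    window_hours: float, period_hours: float = 720.0) -> float:
    """Fraction of the whole period's error budget spent during the window."""
    return burn_rate(error_rate, slo) * window_hours / period_hours


def should_page(error_rate: float, slo: float, window_hours: float = 1.0,
                budget_fraction: float = 0.02, period_hours: float = 720.0) -> bool:
    """Page when the window has consumed at least budget_fraction of the budget."""
    return budget_consumed(error_rate, slo, window_hours, period_hours) >= budget_fraction


if __name__ == "__main__":
    slo = 0.999  # assumed SLO for the example
    for rate in (0.005, 0.02):
        print(f"error rate {rate:.1%}: burn rate {burn_rate(rate, slo):.1f}, "
              f"page={should_page(rate, slo)}")
```

With these assumptions, burning 2% of a 30-day budget in one hour corresponds to a burn rate of about 14.4, which for a 99.9% SLO means roughly 1.44% of requests failing over that hour.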

Pillar 3: Reducing Toil with GKE Autopilot

I have written about this before, but it bears repeating. GKE Autopilot (which went GA this year) was a game-changer for our SRE maturity.

In GKE Standard, we spent hours managing node pools. "Oh, this pool needs an upgrade." "Oh, this bin-packing is inefficient." "Oh, we need to patch the OS." That is Toil. It adds no value to the business.

We moved our stateless microservices to Autopilot. Google manages the nodes. Google patches the OS. We just pay for the pods. Suddenly, my team had 10 extra hours a week. We used that time to build better CI/CD pipelines and automated load tests.

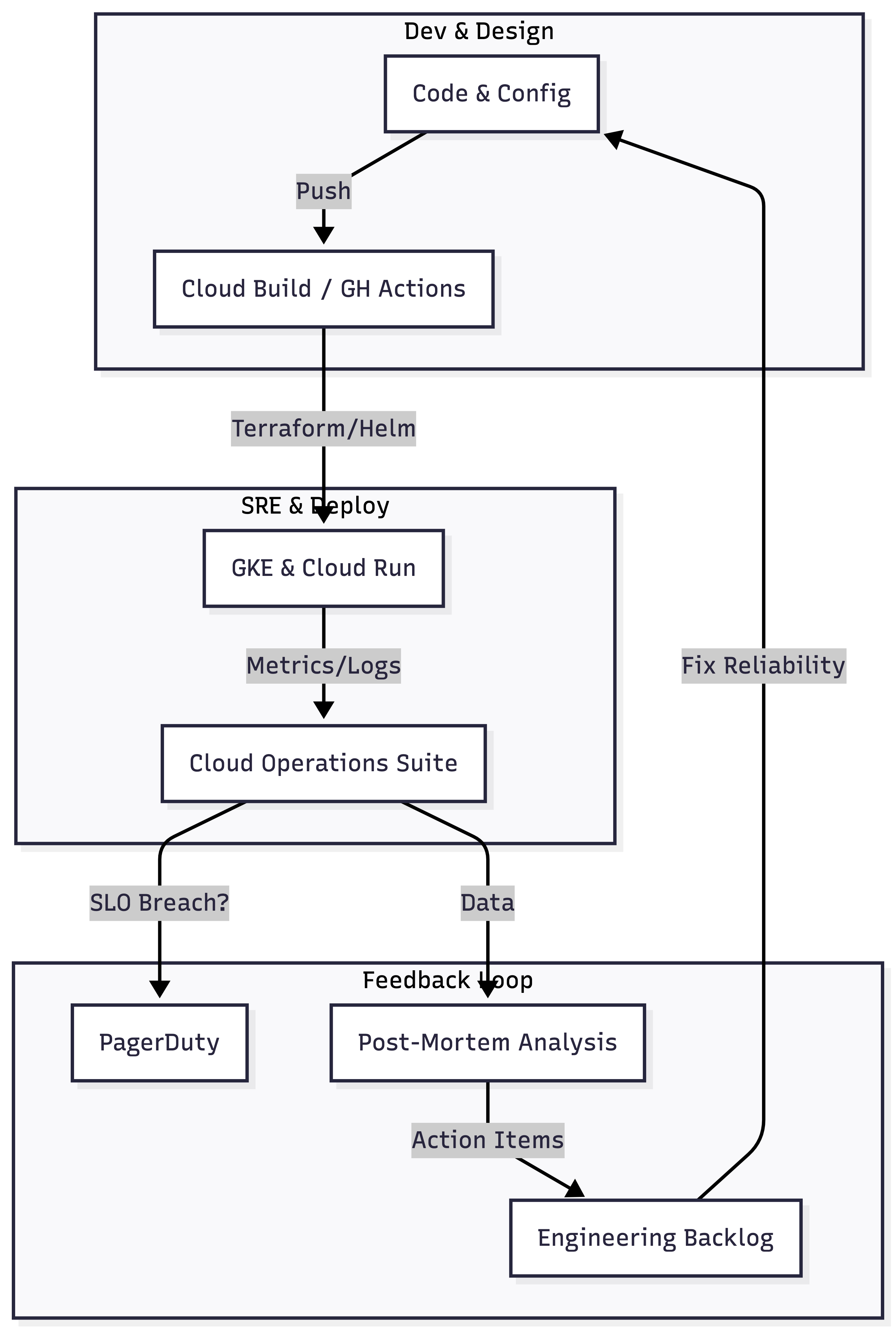

The SRE Workflow: A Visual Guide

Here is how our feedback loop looks now at the end of 2021. It's circular, not linear.

Notice the Post-Mortem box. This is key. When things break (and they do), we don't fire people. We write a doc.

- What happened?

- Why? (The 5 Whys technique)

- How do we prevent it automatically next time?

Rad's "Gotchas" Corner: The 2021 Scars ⚠️

It wasn't all smooth sailing. Here is where we tripped up this year.

1. The "100% Reliability" Fallacy

Management came to me and said, "We want 100% availability." I had to tell them: "No, you don't." 100% is infinitely expensive. It means you can never update anything. We settled on 99.9%. That gives us an Error Budget of roughly 43 minutes of downtime per month. We use that budget to push updates fast. If we run out of budget? We freeze deployments until next month. That is the SRE deal.
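The 43-minute figure is easy to check, and so is the freeze rule. This is a minimal sketch assuming a 30-day month; the function names are hypothetical.

```python
def error_budget_minutes(slo: float, period_days: int = 30) -> float:
    """Minutes of full downtime the SLO tolerates over the period."""
    return (1.0 - slo) * period_days * 24 * 60


def deploys_frozen(downtime_minutes: float, slo: float, period_days: int = 30) -> bool:
    """True once the downtime so far has used up the whole budget."""
    return downtime_minutes >= error_budget_minutes(slo, period_days)


if __name__ == "__main__":
    print(f"99.9% budget: {error_budget_minutes(0.999):.1f} minutes")
    print(f"frozen after 50 minutes down: {deploys_frozen(50.0, 0.999)}")
    # A 100% target leaves a budget of zero, so deploys are frozen from minute one.
    print(f"frozen at 100% with no downtime: {deploys_frozen(0.0, 1.0)}")
```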

2. Observability Costs Money

We turned on everything in Cloud Logging and Cloud Trace. We logged every HTTP header, every payload. Then we got the bill. Lesson: Sampling is your friend. You don't need to trace 100% of requests to catch a latency issue. 5% is usually enough. Use Log Exclusion rules in Cloud Logging to drop the noise (like successful health checks) before it hits the storage bucket.
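Both ideas fit in a few lines. The sketch below shows deterministic 5% sampling keyed on the trace ID, plus a noise filter for successful health checks. It runs in-process purely for illustration: real exclusion rules are configured on the Cloud Logging side, and the field names (`path`, `status`) and health-check paths here are hypothetical.

```python
import hashlib

# Hypothetical health-check endpoints; adjust to whatever the service exposes.
HEALTH_PATHS = frozenset({"/healthz", "/readyz"})


def keep_trace(trace_id: str, sample_rate: float = 0.05) -> bool:
    """Deterministic sampling: the same trace ID always gets the same decision."""
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # uniform in [0, 1)
    return bucket < sample_rate


def is_noise(entry: dict) -> bool:
    """True for successful health checks, which rarely justify storage costs."""
    return entry.get("path") in HEALTH_PATHS and 200 <= entry.get("status", 0) < 300


if __name__ == "__main__":
    kept = sum(keep_trace(f"trace-{i}") for i in range(20_000))
    print(f"kept {kept} of 20000 traces")
    print(is_noise({"path": "/healthz", "status": 200}))  # dropped as noise
    print(is_noise({"path": "/healthz", "status": 503}))  # failing check is kept
```

Hashing the trace ID instead of rolling a random number means every service that sees the same trace makes the same keep-or-drop decision, so sampled traces stay complete.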

3. The Log4j Panic (Dec 2021 Edition)

When the vulnerability dropped a few weeks ago, the difference between "Ops" and "SRE" became clear.

- Ops Approach: SSH into every box, grep for jar files, panic, patch manually.

- SRE Approach: We used GCP Security Command Center and Container Analysis. We queried our Software Bill of Materials (SBOM). We applied a Cloud Armor WAF rule to block the JNDI attack strings globally at the load balancer level while we patched the backend images in our CI/CD. It turned a catastrophe into a managed incident.
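"Querying the SBOM" can be as simple as filtering a component list by name and version. The sketch below assumes a hypothetical flattened SBOM (a list of dicts with `image`, `name`, and `version` keys) rather than any specific SBOM standard or the Container Analysis API, and it takes the minimum fixed version as a parameter. The value passed in the example is an assumption to verify against the current Apache Log4j security advisory.

```python
import re
from typing import Iterable, List, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Leading numeric parts only: '2.14.1' -> (2, 14, 1), '2.0-beta9' -> (2, 0)."""
    match = re.match(r"\d+(?:\.\d+)*", version)
    if not match:
        return ()  # unparseable versions sort lowest, so they get flagged
    return tuple(int(part) for part in match.group().split("."))


def find_vulnerable(sbom: Iterable[dict], min_fixed: Tuple[int, ...],
                    component: str = "log4j-core") -> List[Tuple[str, str]]:
    """Return (image, version) for every image shipping the component below min_fixed."""
    return [
        (entry["image"], entry["version"])
        for entry in sbom
        if entry["name"] == component and parse_version(entry["version"]) < min_fixed
    ]


if __name__ == "__main__":
    # Hypothetical inventory; image names and versions are made up for the example.
    sbom = [
        {"image": "checkout:1.4", "name": "log4j-core", "version": "2.14.1"},
        {"image": "search:2.0", "name": "log4j-core", "version": "2.17.1"},
        {"image": "search:2.0", "name": "guava", "version": "30.1"},
    ]
    for image, version in find_vulnerable(sbom, min_fixed=(2, 17, 1)):
        print(f"{image} ships log4j-core {version}")
```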

Looking Ahead to 2022

So, what is next? We are pretty good at "Day 2 Operations" now. For 2022, my eyes are on:

- Supply Chain Security: Binary Authorization. Ensuring that the code in production is actually what we built. (Thanks, Log4j, for highlighting this).

- FinOps: SREs are also responsible for cost. If a query costs $50 to run, it's a bug, even if it returns the right result.

- Chaos Engineering: We are starting to look at breaking things on purpose in staging to see if our alerts actually fire.

Final thought for the year: If you are still waking up at 3 AM for disk space alerts, make 2022 the year you stop. Automate the cleanup, or increase the disk size automatically. Be a reliability engineer, not a server babysitter.

Happy New Year, folks. May your latencies be low and your uptime be high (but not 100%).

See you in '22!

-Rad